I just put .17 out there…I’ve been playing with it for a few weeks now with some of the most recent discussion added in, let me know

Oh no - doesn’t like the update to the DB, added column, now can’t find outputdir

[logs via email]

Doesn’t like it when I type something proper now when I typed it improperly before. .18 fixes that little thing, thanks

Mbps ? Recordings now have a column for… bit rate? or some different value?

Is this calculated, or from the Tablo? There appears to be little consistency between shows from the same source (channel) or between channels of the same HD resolution, and none match the max setting for recording quality.

this is calculated from information that Tablo is providing…so…the size of the recording is the recording, and all meta-data…so, any preview images, etc that are generated…that can cause a bit of variability…but that number is the number of bytes that the recording is consuming. So, I take that number and multiply it by 8 to get the number of bits, then divide it by 1,000,000. I personally would generally divide it by 1,048,576 (1k=1024), but when dealing with HD size, I found that they divide by 1000, not 1024…so, I stayed with the same 1k previously used.

So, the variability likely comes from the excess ‘stuff’ that is included with the size of the recording, but I wouldn’t expect it to be extremely off from the truth. What is it you are seeing?

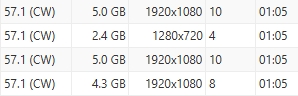

As you can see from my screenshot…

this is actually 4 recordings of 4 episodes from the same network, same series…I didn’t until just now notice that one of them was recorded at a different resolution, but even the 3 that were recorded at the same resolution, there is a 2 point difference in the mbps from one to another.

I prior to today had my Tablo set to maximum resolution…and my range of recordings goes from 2-10 depending on the resolution of the file where sd recordings are obviously down to the 2 mark, and my 1080 in upwards of the 10…but I don’t have anything larger than 10 in my recording…

I’m missing the obvious I guess. It’s not MegaBitsPerSecond bit rate. It’s a designation for… some size.

Size of recording (is segments) tablo JSON gives me 1052459008 nothing about meta-data.

(1052459008 * 8) / 1000 = 8419672.064

Size 1.1GB | Mbps 4 | Length 30min

So I’m making it way too complicated.

Are you talking about what should be displayed as a base 10 number or a base 2 number?

/1m, not 1k, but yes, it’s just a size per second. What was originally identified is that if your Mbps is way lower than expected, the recording might not have finished properly.
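For anyone wanting to check the numbers, here is a minimal Python sketch of the calculation as described in this thread: bytes times 8, divided by 1,000,000, divided by the length in seconds. It is not the tool's actual code; the function name `mbps` and the division by duration are assumptions based on the "size per second" description, and the inputs are the 1052459008 bytes and 30 min posted above.

```python
def mbps(size_bytes: int, duration_seconds: float, divisor: int = 1_000_000) -> float:
    """Average megabits per second for a recording of the given size and length."""
    bits = size_bytes * 8
    return bits / divisor / duration_seconds


size_bytes = 1052459008      # size reported in the Tablo JSON, quoted in the thread
duration_seconds = 30 * 60   # the 30 min length shown for that recording

decimal_mbps = mbps(size_bytes, duration_seconds)             # 1M = 1,000,000
binary_mbps = mbps(size_bytes, duration_seconds, 1_048_576)   # 1M = 1024 * 1024

print(f"{decimal_mbps:.2f}")  # 4.68
print(f"{binary_mbps:.2f}")   # 4.46
```

The decimal result of 4.68 is consistent with the "Mbps 4" in the row above if the column drops the fraction rather than rounding.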

I understand how binary “powers of 2” equate to 1024, and that for consumers’ sake 1k is called 1,000 bytes. 8 bits to 1 byte. 7-bit ASCII with 1 bit for parity (or error checking).

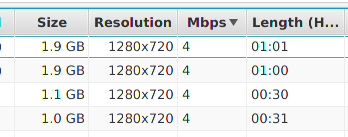

What’s the Mbps column for?

Whether it’s (almost) 2GB or 1GB, 1hr or .5hr seems to have no relation to it.

If it were this, only lesser resolution channels would vary - theoretically.

0 1 2 4 8 16 32 64 128 256 512 1024

Essentially yes, I guess that’s what it boils down to

Well, it was a piece of info that was wanted, but not exceedingly easy to calculate till I figured out the formula, it helped him figure out that he had bad recordings, so if it’s useful, and not harmful, I figured I would include it. If you are configured as I was with upwards of 10Mbps possible, the differences are at minimum, interesting info.

FYI,

I intend to eventually keep track of your visible columns and orders and keep them so next time you open the window, it’ll be the same as last time you used it.

Thank you so much for adding the “Mbps” column. However, when the value is 1 or 2 Mbps It’s really hard to distinguish if there is a problem. Can you add 1 or 2 decimals of precision to the “Mbps” column?

After you export the table to CSV you cannot properly sort by the “Size” column since it has mixed Units of Measure. Could you either stick to MB or GB or even leave it in bytes? I.e. you could put the Unit of Measure (“GB”, “MB”, or “Bytes”) as the column header and then just put a number in the data. My preference would be “MB” since it has a decent level of precision. I’m not sure everyone would agree with my preference.

Great job with this so far. I really like the tool and appreciate your efforts!

So, I’ve added 2 decimal places to Mbps, and I’ll leave the MB/GB in the UI, but export the raw bytes…so that should solve your issues with that stuff…this’ll all happen in the next release.
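A rough Python sketch of that split, human-readable sizes for display and raw bytes plus 2-decimal Mbps in the export. This is only an illustration, not the tool's code: `human_size`, `export_csv`, the column names, and the sample rows are all made up, and decimal units (1 GB = 1,000,000,000 bytes) are assumed.

```python
import csv
import io


def human_size(size_bytes: int) -> str:
    """Format bytes for display using decimal units (1 GB = 1,000,000,000 bytes)."""
    for unit, factor in (("GB", 1_000_000_000), ("MB", 1_000_000)):
        if size_bytes >= factor:
            return f"{size_bytes / factor:.1f}{unit}"
    return f"{size_bytes} bytes"


def export_csv(recordings) -> str:
    """Write raw byte counts and 2-decimal Mbps so the CSV sorts numerically."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Title", "Bytes", "Mbps"])
    for title, size_bytes, seconds in recordings:
        rate = size_bytes * 8 / 1_000_000 / seconds
        writer.writerow([title, size_bytes, f"{rate:.2f}"])
    return out.getvalue()


# Hypothetical rows: (title, size in bytes, length in seconds)
rows = [("Episode A", 1052459008, 1800), ("Episode B", 450000000, 1800)]

print(human_size(rows[0][1]))  # 1.1GB
print(export_csv(rows))
```

With a single unit per column (plain bytes here), a spreadsheet can sort the exported size numerically without parsing "MB" or "GB" suffixes.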

OK, I can see where some may find this useful information. Now I’m looking at it… wondering why all the fluctuation and no max? This is probably beyond the scope of your application - If my max recording is 5 Mbps shouldn’t, at least, channels broadcast at 1080 hit 5? and the 720 should hit at least sometimes. I’ve got 4 out of 23 @ 4Mbps recorded 1280x720 - while 5 of the 3Mbps are from a 1080 broadcast.

Part, I guess, depends on the numbers tablo is providing. The “recording” isn’t a single file, so is it the size of the *.ts segments you’re calculating? or all encompassing? (does anyone really know?) or am I just not getting what I should? either due to broadcast or tablo encoding? Or is the formula theoretical, for comparison purposes only?

Leaving it at raw bytes allows for as much precision as anyone might prefer… To take it a step further, if you’re using this to find “incomplete” shows, eventually some way to import a list for deletion of the ones you discover you want to discard.

I’m using the ‘size’ provided by the Tablo API. This is what the docs have to say about that size value

“the size of all recording’s data (including video, thumbnails, metadata, etc.) in bytes”

So…if anything it should be higher than the actual Mbps of the recording…I can’t tell you honestly what causes the discrepancy…I was getting things in the 7+ range yesterday till I set things to 5 max…now all of the recordings I recorded yesterday since making the change are 3, with one 4 put in there…you have the formula that I’m using…it’s all based on info provided by them, so I would suggest that we, as a community, reach out to @TabloSupport and see what they have to say…maybe they will tell me that my formula is wrong…maybe they will be able to explain the engineering around why the number isn’t matching with what you would expect…who knows

Agreed, thus why I’ve moved it back to raw for the export…but for the GUI, I like something a bit more ‘human’ readable

If I understand correctly, a constant bitrate is not generally used. Instead a maximum is selected and then the video is compressed to a certain level to not exceed that maximum. Also the rate of visual differences between frames makes a difference, so fast motion tends to result in larger video file sizes.

You mean that, after transcoding to h.264, a 2 hour recording of nothing but a blue screen at 5 Mbps will take less physical space than a 2 hour recording of a basketball game.

I will admit, understanding this exceeds the limits of “general-purpose” users. I once read that gstreamer/ffmpeg are by developers, for developers; to understand the settings you need to be at least a modest “expert”.

I’ve used the streaming URL via a media player (smplayer and vlc) and each had a variable bit rate, almost expected from streaming video… well the same from video exported via ffmpeg.

VBR - not to exceed X Mbps does seem logical, although somewhat misleading… but then I guess it’s not constant to start with - so why would you expect it to be constant.

In the end, the number he comes up with should still work for comparison when looking for possible “failed” recordings.
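As a sketch of that kind of comparison, in Python: flag any recording whose Mbps is well below what is typical for its resolution. Everything here is hypothetical (the `flag_low_bitrate` name, the 0.5 ratio, the field names, and the sample data); it only illustrates the idea and is not something the tool is known to do.

```python
from collections import defaultdict
from statistics import median


def flag_low_bitrate(recordings, ratio=0.5):
    """Return titles whose Mbps is below `ratio` times the median for their resolution."""
    by_resolution = defaultdict(list)
    for rec in recordings:
        by_resolution[rec["resolution"]].append(rec["mbps"])

    flagged = []
    for rec in recordings:
        typical = median(by_resolution[rec["resolution"]])
        if rec["mbps"] < ratio * typical:
            flagged.append(rec["title"])
    return flagged


# Made-up sample data for illustration only
recordings = [
    {"title": "A", "resolution": "1280x720", "mbps": 4.1},
    {"title": "B", "resolution": "1280x720", "mbps": 3.6},
    {"title": "C", "resolution": "1280x720", "mbps": 0.9},
    {"title": "D", "resolution": "1920x1080", "mbps": 3.2},
]

print(flag_low_bitrate(recordings))  # ['C']
```

Comparing within a resolution rather than against the configured maximum sidesteps the VBR question, since the absolute number matters less than how far one recording sits from its neighbours.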

I don’t watch basketball, nor stare at a 2hr blue screen, can’t help there :shrug:

Been trying to find what their settings are about. Some articles imply recording quality has to do with bandwidth, while also calling it bit rate.

How much it may buffer over your network and more storage space is emphasized.

and this is referenced from above;

Mentions video quality, but the primary issue regarding these settings appears to be getting the video across your network.

Affects how much storage and bandwidth is used for Recordings. Use a lower setting if you experience buffering.

As for being the same as video bit rate that goes with encoding / transcoding this doesn’t come up much at all anywhere in their articles.

Because I’ve gone so far off topic… maybe a basketball game against a blue screen might be the way to go

Doesn’t all of this transcoding, H.264/H.265, in essence result in basically a start frame to a group of pictures, followed by the intermediate frames which are the changes to the start/previous frame? More differences might result in more data in the frames and slightly larger file sizes.
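A toy Python illustration of that idea, with a big caveat: this is not how H.264/H.265 actually encode anything (real codecs use motion compensation, transforms, and entropy coding). It just stores the first frame in full and then only the pixels that changed, to show why a static picture costs less than a busy one. The `encoded_size` function and the tiny 16-pixel "frames" are made up for the example.

```python
def encoded_size(frames):
    """Count stored values: full first frame, then only (index, value) pairs that changed."""
    total = len(frames[0])  # start frame: every pixel stored
    for prev, cur in zip(frames, frames[1:]):
        changes = [(i, v) for i, (p, v) in enumerate(zip(prev, cur)) if p != v]
        total += 2 * len(changes)  # each change costs an index and a value
    return total


width = 16
# "Blue screen": ten identical frames, nothing changes after the first
static = [[7] * width for _ in range(10)]
# "Basketball game": every pixel changes on every frame
moving = [[(i + t) % 4 for i in range(width)] for t in range(10)]

print(encoded_size(static))  # 16
print(encoded_size(moving))  # 304
```

Same number of frames, same "resolution", very different amounts of data, which is the blue screen versus basketball game point from earlier in the thread.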